Robotics developer Figure caused a stir Wednesday by sharing a video demonstration of its first humanoid robot engaging in real-time conversation, thanks to generative AI from OpenAI.

“With OpenAI, Figure 01 can now have full conversations with people,” Figure said on Twitter, emphasizing the robot’s ability to understand and respond to human interaction instantly.

The company said its recent partnership with OpenAI gives its robots high-level visual and language intelligence, allowing for “fast, low-level, dexterous robotic movements.”

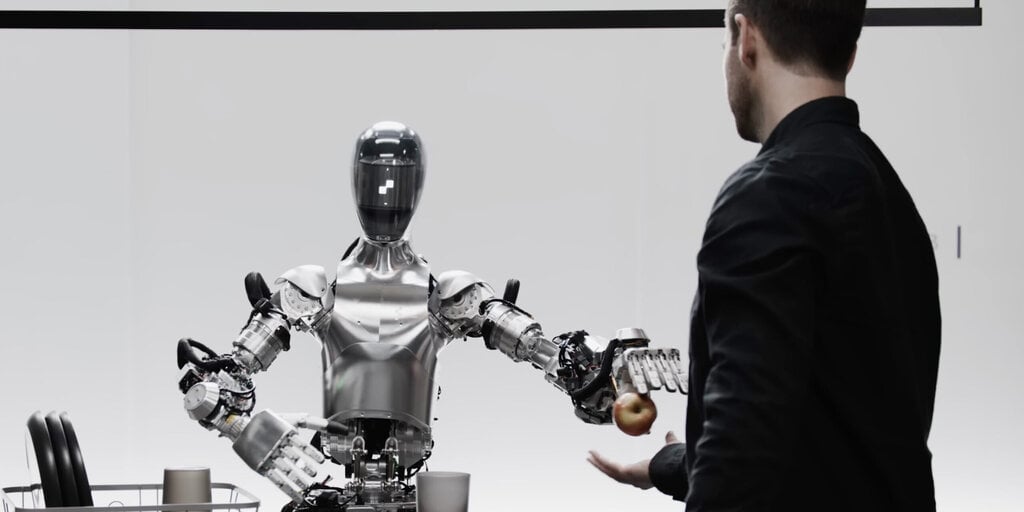

In the video, Figure 01 interacts with the company’s lead AI engineer, Corey Lynch, who puts the robot through several tasks in a makeshift kitchen, including identifying an apple, dishes, and cups.

When Lynch asked the robot to give him something to eat, Figure 01 identified an apple as food. Lynch then demonstrated the robot’s multitasking abilities by having Figure 01 gather trash into a basket while he asked it questions.

On Twitter, Lynch explained the Figure 01 project in more detail.

“Our robots can describe visual experiences, plan future actions, reflect on memories, and verbalize inferences,” he wrote in an extensive thread.

According to Lynch, the team feeds images from the robot’s cameras and text transcribed from speech captured by its onboard microphones into a large multimodal model trained by OpenAI.

Multimodal AI refers to artificial intelligence that can understand and create various data types such as text and images.

Lynch emphasized that Figure 01’s behavior is learned, runs at normal speed, and is not remotely controlled.

“The model processes the entire conversation history, including past images, to come up with verbal responses, which are then spoken back to the human via text-to-speech,” Lynch said. “The same model is responsible for deciding which learned closed-loop behavior the robot should run to carry out a given command, loading specific neural network weights onto the GPU, and executing a policy.”
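For developers, the loop Lynch describes can be pictured roughly as follows. This is only an illustrative sketch, not Figure's or OpenAI's code: `ConversationLoop`, `Turn`, `toy_model`, and the policy names are all hypothetical, and the "model" here is a keyword check standing in for a real multimodal model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Turn:
    image: bytes  # placeholder for a camera frame
    text: str     # speech already transcribed to text


@dataclass
class ConversationLoop:
    # Hypothetical stand-in for the multimodal model: it sees the whole
    # history and returns (spoken reply, name of the behavior to run).
    model: Callable[[List[Turn]], Tuple[str, str]]
    # Hypothetical learned behaviors, keyed by name. In the real system this
    # step would load network weights and run a policy on the robot.
    policies: Dict[str, Callable[[], str]]
    history: List[Turn] = field(default_factory=list)

    def step(self, image: bytes, transcript: str) -> Tuple[str, str]:
        # Append the new observation so the model always sees full history.
        self.history.append(Turn(image, transcript))
        reply, policy_name = self.model(self.history)
        outcome = self.policies[policy_name]()
        return reply, outcome  # the reply would go to text-to-speech


def toy_model(history: List[Turn]) -> Tuple[str, str]:
    # Assumption: a trivial keyword rule in place of real model inference.
    latest = history[-1].text.lower()
    if "eat" in latest or "hungry" in latest:
        return "Sure, here is an apple.", "hand_over_apple"
    return "I see a table with some objects.", "idle"


loop = ConversationLoop(
    model=toy_model,
    policies={
        "hand_over_apple": lambda: "apple handed over",
        "idle": lambda: "no action",
    },
)
reply, outcome = loop.step(b"", "Can I have something to eat?")
```

The point of the sketch is the split of responsibilities: one model both answers in language and chooses which learned behavior to run, while the behaviors themselves are separate policies.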

Lynch said Figure 01 is designed to describe its surroundings concisely and can apply “common sense” to make decisions, such as inferring that dishes will be placed on a rack. It can also parse vague statements, such as someone being hungry, into actions, such as offering an apple, while explaining its own actions.

The debut sparked an enthusiastic response on Twitter, with many people impressed by Figure 01’s capabilities and more than a few adding it to their list of milestones on the road to the singularity.

“Please tell me your team has watched all the Terminator movies,” one person responded.

“We need to find John Connor as quickly as possible,” another added.

For AI developers and researchers, Lynch provided a variety of technical details.

“All actions are driven by neural network visuomotor transformer policies that map pixels directly to actions,” Lynch said. “These networks take onboard images at 10hz and generate 24-DOF actions (wrist poses and finger joint angles) at 200hz.”
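The figures Lynch cites imply 20 action outputs for every camera image (200 Hz divided by 10 Hz). The article does not say how the two rates are bridged, so the sketch below simply assumes each image yields a fixed chunk of 20 actions; `dummy_policy` and `run` are hypothetical placeholders, not Figure's implementation.

```python
IMAGE_HZ = 10    # camera frames consumed per second (from Lynch's description)
ACTION_HZ = 200  # action outputs per second (from Lynch's description)
DOF = 24         # wrist poses plus finger joint angles
ACTIONS_PER_IMAGE = ACTION_HZ // IMAGE_HZ  # 20 actions per image


def dummy_policy(image):
    """Hypothetical placeholder policy: one chunk of all-zero actions per image."""
    return [[0.0] * DOF for _ in range(ACTIONS_PER_IMAGE)]


def run(seconds, policy=dummy_policy):
    """Collect every action emitted over `seconds` of simulated operation."""
    actions = []
    for _ in range(seconds * IMAGE_HZ):
        image = None  # placeholder for an onboard camera frame
        actions.extend(policy(image))
    return actions


actions = run(1)
```

One simulated second therefore consumes 10 images and produces 200 action vectors of 24 values each.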

Figure 01’s high-profile debut comes as policymakers and global leaders grapple with the spread of AI tools into the mainstream. Most of the discussion has been about large language models like OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude AI, but developers are also looking at ways to give AI a physical humanoid robot body.

Figure AI and OpenAI did not immediately respond to Decrypt’s request for comment.

“One is kind of a utilitarian goal, and that’s what Elon Musk and others are pursuing,” Ken Goldberg, a professor of industrial engineering at UC Berkeley, previously told Decrypt. “A lot of the work that’s going on right now, which is why people invest in companies like Figure, is hoping that these things will work and be compatible,” he said, especially in the area of space exploration.

Along with Figure, other companies looking to combine AI and robotics include Hanson Robotics, which introduced its Desdemona AI robot in 2016.

“Just a few years ago, it was thought that having a full conversation with a humanoid robot while it was planning and performing fully learned actions would be decades away,” Lynch said on Twitter. “Obviously a lot has changed.”

Edited by Ryan Ozawa.
