Google’s new AI-powered search results are getting a lot of attention for the wrong reasons. After the tech giant announced a slew of new AI-based tools last week as part of a new “Gemini era,” its trademark web search results were significantly transformed, with natural language answers to questions now displayed above links to websites.

“Over the past year, we’ve answered billions of queries as part of our Search Generative Experience,” Google CEO Sundar Pichai told the audience. “People are using it to search in completely new ways, to ask new types of questions that are longer and more complex, to even search with photos, and to get back the best the web has to offer.”

But the answers can be incomplete, inaccurate, or even dangerous, from straying into eugenics to failing to identify poisonous mushrooms.

Many of the “AI Overview” answers draw on social media and even satirical sites, where wrong answers are the whole point. Google users have been sharing numerous problematic answers they received from Google’s AI.

When one user told it, “I’m feeling depressed,” Google reportedly responded that one way to deal with depression is to “jump off the Golden Gate Bridge.”

Another user asked, “If you jump off a cliff, can you stay in the air as long as you don’t look down?” Citing a comic-inspired Reddit thread, Google’s “AI Overview” confirmed the gravity-defying ability.

The prominence of Reddit threads among these examples follows a deal announced earlier this year under which Google will use Reddit’s data to “make it easier to discover and access the communities and conversations people are looking for.” Earlier this month, ChatGPT developer OpenAI announced a similar deal to license Reddit’s data.

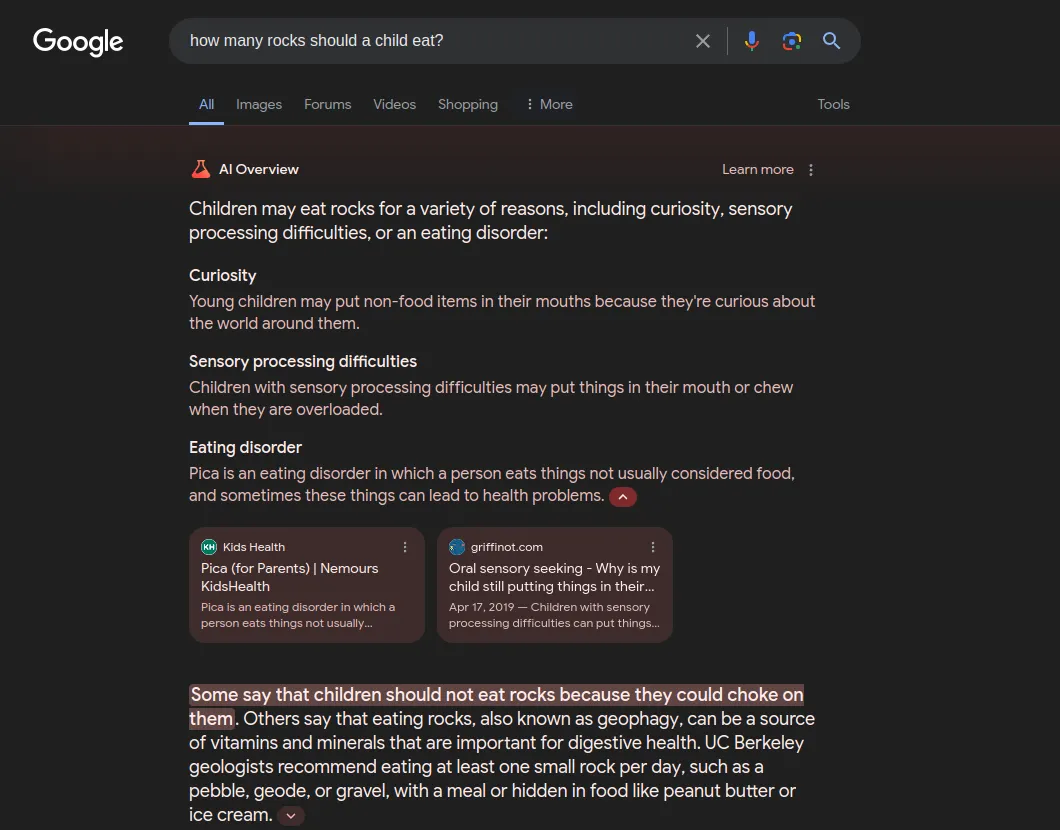

Another absurd answer came when a Google user asked, “How many rocks should a child eat?” Google’s AI responded with “at least one small rock per day,” citing a “UC Berkeley geologist.”

Since then, Google has removed or edited the most outrageous and widely discussed examples. Google did not immediately respond to a request for comment from Decrypt.

A persistent problem with generative AI models is their tendency to make up answers, known as “hallucinations.” Because the AI is producing something that is not true, hallucinations can reasonably be classified as lies. In cases like the Reddit-sourced answers, however, the AI did not lie; it simply took the information provided by its sources at face value.

Thanks to a Reddit comment from over a decade ago, Google’s AI reportedly said that adding glue to cheese is a good way to prevent it from sliding off pizza.

A Google AI overview suggested adding glue to help the cheese stick to the pizza, and it turns out the source was an 11-year-old Reddit comment from user F*cksmith 😂 pic.twitter.com/uDPAbsAKEO

— Peter Yang (@petergyang) May 23, 2024

OpenAI’s flagship AI chatbot, ChatGPT, has its own history of fabricating facts, including in April 2023, when it falsely accused law professor Jonathan Turley of sexual harassment during a trip he never took.

In its overconfidence, the AI has apparently treated everything on the internet as true, going so far as to lay blame at the feet of former Google executives and to find the company itself guilty of antitrust violations.

The feature has certainly sparked some humor and fun, as users put pop culture queries to Google.

Meanwhile, the answer on the recommended daily rock intake for children has since been updated and now notes that “curiosity, sensory processing disorders, or eating disorders” may be to blame for such diets.

Edited by Ryan Ozawa.
